If you’ve had a chance to review the XenDesktop 7 PowerShell SDK documentation, you might have noticed a few new snap-ins for the new services included with XenDesktop 7 as part of the FlexCast Management Architecture. These snap-ins are designated V1 on the cmdlet help site and cover StoreFront, Delegated Admin, Configuration Logging, Environment Tests, and Monitoring.

Of these new services, the Environment Test Service appeals to me most, as it provides a framework for running predefined tests and test suites against a XenDesktop 7 site. However, the SDK documentation doesn’t provide much, if any, guidance on using this snap-in, so I thought I’d share a quick rundown of the core of this service, along with some sample scripts using the main cmdlets.

The most basic function of this service is to run predefined tests against various site components, configurations, and workflows. As of XD7 RTM, there are 201 individual TestIds, which can be listed with the Get-EnvTestDefinition cmdlet (output trimmed to the TestId column):

```
TestId
------
Host_CdfEnabled
Host_FileBasedLogging
Host_DatabaseCanBeReached
Host_DatabaseVersionIsRequiredVersion
Host_XdusPresentInDatabase
Host_RecentDatabaseBackup
Host_SchemaNotModified
Host_SnapshotIsolationState
Host_SqlServerVersion
Host_FirewallPortsOpen
Host_UrlAclsCorrect
Host_CheckBootstrapState
Host_ValidateStoredCsServiceInstances
Host_RegisteredWithConfigurationService
Host_CoreServiceConnectivity
Host_PeersConnectivity
Host_Host_Connection_HypervisorConnected
Host_Host_Connection_MaintenanceMode...
```

The tests are broken down into several functional groups that align with the various FMA services (Host, Configuration, MachineCreation, etc.), and each TestId is prefixed with its group name. For example, the test that verifies the Configuration service can connect to the site database is called Configuration_DatabaseCanBeReached.
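Because the group is simply the prefix before the first underscore, a quick count of tests per group is easy to get from the console. The sketch below assumes the Environment Test snap-in (Citrix.EnvTest.Admin.V1, going by the SDK’s V1 naming) is already loaded and that every TestId follows the Group_Name pattern shown above; Get-XdTestGroupSummary is a hypothetical helper name, not part of the SDK.

```powershell
# Sketch: count Environment Test definitions per functional group.
# Assumes the EnvTest snap-in is already loaded in this session and that
# each TestId has the form <Group>_<Name> (e.g. Host_CdfEnabled).
function Get-XdTestGroupSummary {
    # Group on everything before the first underscore, largest group first.
    Get-EnvTestDefinition |
        Group-Object -Property { ($_.TestId -split '_', 2)[0] } |
        Sort-Object -Property Count -Descending |
        Select-Object -Property @{ Name = 'Group'; Expression = { $_.Name } }, Count
}

# Usage:
#   Get-XdTestGroupSummary
#   (Get-XdTestGroupSummary | Measure-Object -Property Count -Sum).Sum   # total number of TestIds
```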

Each test has a description of its function and a test scope that dictates what type of object(s) can be tested. Tests are executed against site components and objects according to their TestScope and/or TargetObjectType, and the service runs them synchronously or asynchronously depending on their InteractionModel. You can view all of the details about a test by passing its TestId to the Get-EnvTestDefinition cmdlet; for example:

```
PS C:\> Get-EnvTestDefinition -TestId Configuration_DatabaseCanBeReached

Description      : Test the connection details can be used to
                   connect successfully to the database.
DisplayName      : Test the database can be reached.
InteractionModel : Synchronous
TargetObjectType :
TestId           : Configuration_DatabaseCanBeReached
TestScope        : ServiceInstance
TestSuiteIds     : {Infrastructure}
```
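Since TestScope, InteractionModel, and TestSuiteIds are ordinary properties on each definition, the full list can be filtered the same way, for example to find every synchronous test in the Infrastructure suite. This is a sketch only: Find-XdTestDefinition and its parameters are hypothetical, and it relies on nothing beyond the property names in the output above and an already-loaded EnvTest snap-in.

```powershell
# Sketch: filter Environment Test definitions on the properties shown by
# Get-EnvTestDefinition. Every filter is optional; an omitted filter matches all.
function Find-XdTestDefinition {
    param(
        [string]$TestScope,         # e.g. ServiceInstance
        [string]$InteractionModel,  # e.g. Synchronous or Asynchronous
        [string]$TestSuiteId        # e.g. Infrastructure
    )
    Get-EnvTestDefinition | Where-Object {
        (-not $TestScope -or $_.TestScope -eq $TestScope) -and
        (-not $InteractionModel -or $_.InteractionModel -eq $InteractionModel) -and
        (-not $TestSuiteId -or $_.TestSuiteIds -contains $TestSuiteId)
    } | Select-Object -Property TestId, DisplayName, TestScope, InteractionModel
}

# Usage: every synchronous test that belongs to the Infrastructure suite
#   Find-XdTestDefinition -InteractionModel Synchronous -TestSuiteId Infrastructure
```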

Test suites are groups of tests executed in succession to validate groups of components, as well as their interactions and workflows. The Get-EnvTestSuiteDefinition cmdlet returns a list of test suite definitions and can be used to find out which tests make up a suite. For example, running Get-EnvTestSuiteDefinition | Select TestSuiteId returns all of the available test suites:

```
TestSuiteId
-----------
Infrastructure
DesktopGroup
Catalog
HypervisorConnection
HostingUnit
MachineCreation_ProvisioningScheme_Basic
MachineCreation_ProvisioningScheme_Collaboration
MachineCreation_Availability
MachineCreation_Identity_State
MachineCreation_VirtualMachine_State
ADIdentity_IdentityPool_Basic
ADIdentity_IdentityPool_Provisioning
ADIdentity_WhatIf
ADIdentity_Identity_Available
ADIdentity_Identity_State
```
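To see at a glance how big each suite is, you can count the entries in each definition’s Tests property (visible in the DesktopGroup output below). A minimal sketch, assuming the snap-in is loaded and that Tests is a collection; Get-XdSuiteSummary is a hypothetical name.

```powershell
# Sketch: list every test suite with the number of tests it contains.
# Assumes each suite definition exposes its tests through the Tests property,
# as in the Get-EnvTestSuiteDefinition output shown in this post.
function Get-XdSuiteSummary {
    Get-EnvTestSuiteDefinition |
        Select-Object -Property TestSuiteId,
            @{ Name = 'TestCount'; Expression = { @($_.Tests).Count } }
}

# Usage:
#   Get-XdSuiteSummary | Sort-Object TestCount -Descending
```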

Each of these suites can be queried with the same cmdlet by passing the suite’s -TestSuiteId. Let’s take DesktopGroup as an example:

```
PS C:\> Get-EnvTestSuiteDefinition -TestSuiteId DesktopGroup

TestSuiteId  Tests
-----------  -----
DesktopGroup Check hypervisor connection, Check connection maintenance mode, Ch...
```

One thing you’ll notice about this output is that the list of tests is truncated, a result of PowerShell’s default table formatting in the console. For that reason, my preferred way to look at objects with long strings (i.e., descriptions) is to view them in a graphical ISE (PowerGUI is my preference) and explore the objects in the ‘Variables’ pane.

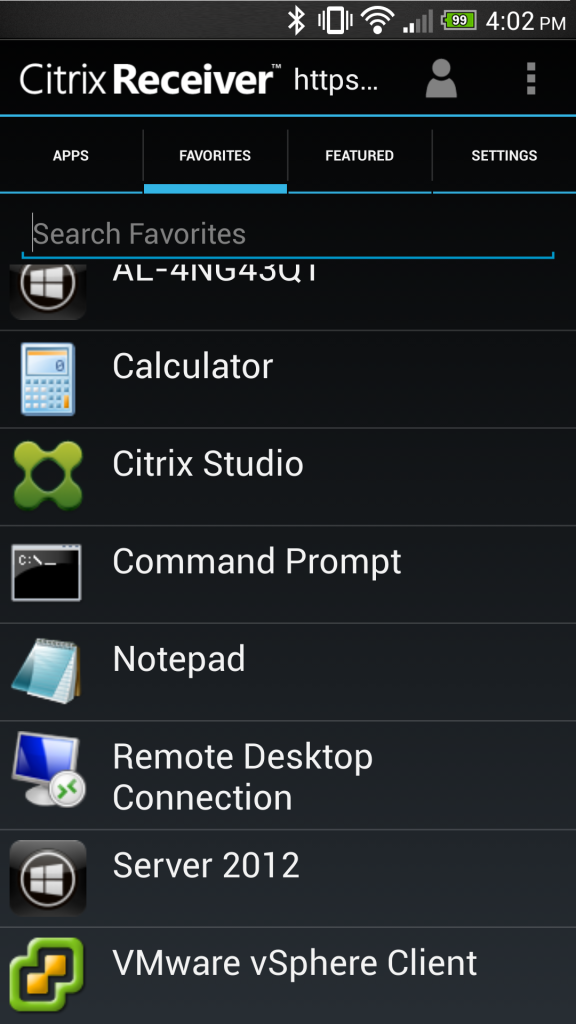

For example, if you store the results of Get-EnvTestSuiteDefinition -TestSuiteId DesktopGroup into a variable ($dgtest) in PowerGUI, each Test object that comprises the test suite can be inspected individually:

The DesktopGroup EnvTestSuite object
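If you’d rather stay in the console, Select-Object -ExpandProperty unrolls the Tests collection so each test is emitted, and formatted, on its own instead of being squeezed into one truncated table cell. A sketch, assuming the snap-in is loaded; Get-XdSuiteTest is a hypothetical wrapper, and since the exact type of each item in Tests isn’t shown above, treat the Format-List step as exploratory.

```powershell
# Sketch: print every test in a suite on its own line instead of the
# single truncated "Check hypervisor connection, ..., Ch..." table cell.
# Assumes the suite's tests are exposed through its Tests property.
function Get-XdSuiteTest {
    param([Parameter(Mandatory)][string]$TestSuiteId)

    # -ExpandProperty unrolls the Tests collection, so each test becomes a
    # separate pipeline object and is formatted on its own.
    Get-EnvTestSuiteDefinition -TestSuiteId $TestSuiteId |
        Select-Object -ExpandProperty Tests
}

# Usage:
#   Get-XdSuiteTest -TestSuiteId DesktopGroup
#   Get-XdSuiteTest -TestSuiteId DesktopGroup | Format-List *   # if the items are objects, show all their properties
```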

To start a test task, use the Start-EnvTestTask cmdlet, passing the TestId or, alternatively, the TestSuiteId, plus a target object where needed. For example:

```
PS C:\> Start-EnvTestTask -TestId Configuration_DatabaseCanBeReached

Active                 : False
ActiveElapsedTime      : 11
CompletedTests         : 1
CompletedWorkItems     : 11
CurrentOperation       :
DateFinished           : 9/16/2013 11:33:31 PM
DateStarted            : 9/16/2013 11:33:20 PM
DiscoverRelatedObjects : True
DiscoveredObjects      : {}
ExtendedProperties     : {}
Host                   :
LastUpdateTime         : 9/16/2013 11:33:31 PM
Metadata               : {}
MetadataMap            : {}
Status                 : Finished
TaskExpectedCompletion :
TaskId                 : 03f5480d-68e8-410a-9da4-5e65d96ac393
TaskProgress           : 100
TerminatingError       :
TestIds                : {Configuration_DatabaseCanBeReached}
TestResults            : {Configuration_DatabaseCanBeReached}
TestSuiteIds           : {}
TotalPendingTests      : 1
TotalPendingWorkItems  : 11
Type                   : EnvironmentTestRun
```
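Most of that output is bookkeeping. For scripting, it helps to boil a task down to the fields you actually check. The sketch below (ConvertTo-XdTaskSummary is a hypothetical name) uses only properties that appear in the output above and accepts task objects from the pipeline.

```powershell
# Sketch: reduce the verbose task object returned by Start-EnvTestTask to the
# handful of fields worth checking. Uses only properties visible in the
# task output above; pipe one or more task objects into it.
function ConvertTo-XdTaskSummary {
    param([Parameter(Mandatory, ValueFromPipeline)]$Task)
    process {
        # Duration is only meaningful once the task has both timestamps.
        $duration = $null
        if ($Task.DateStarted -and $Task.DateFinished) {
            $duration = [datetime]$Task.DateFinished - [datetime]$Task.DateStarted
        }
        # Combine whichever TestIds / TestSuiteIds the task was started with.
        $targets = @($Task.TestIds) + @($Task.TestSuiteIds) | Where-Object { $_ }
        [pscustomobject]@{
            TaskId           = $Task.TaskId
            Targets          = $targets -join ', '
            Status           = $Task.Status
            CompletedTests   = $Task.CompletedTests
            Duration         = $duration
            TerminatingError = $Task.TerminatingError
        }
    }
}

# Usage:
#   Start-EnvTestTask -TestId Configuration_DatabaseCanBeReached | ConvertTo-XdTaskSummary
```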

Once you know what tests are available, what they do, and what results to expect, health check scripts are easy to build on top of this service. Combinations of tests and test suites can, and should, be used to systematically validate XenDesktop 7 site components and functionality.
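As a starting point, a health check can be as simple as running a list of tests and suites and flagging any task that didn’t finish cleanly. This is a sketch under a few assumptions: Invoke-XdHealthCheck is a hypothetical name, the snap-in is loaded, and Start-EnvTestTask returns once the task completes, as it did for the single test above. Note that a Status of Finished only says the task ran to completion; the per-test outcome lives in TestResults, which the sketch passes through rather than interprets.

```powershell
# Sketch of a health check: run each listed test and suite as its own task
# and flag any task that did not finish cleanly.
function Invoke-XdHealthCheck {
    param(
        [string[]]$TestId = @(),
        [string[]]$TestSuiteId = @()
    )
    # One task per test and per suite, so each result maps to a single target.
    $tasks = @()
    foreach ($id in $TestId)      { $tasks += Start-EnvTestTask -TestId $id }
    foreach ($id in $TestSuiteId) { $tasks += Start-EnvTestTask -TestSuiteId $id }

    foreach ($task in $tasks) {
        $targets = @($task.TestIds) + @($task.TestSuiteIds) | Where-Object { $_ }
        # NeedsReview: the task did not finish, or it reported a terminating error.
        # TestResults is passed through untouched for per-test inspection.
        [pscustomobject]@{
            Targets     = $targets -join ', '
            Status      = $task.Status
            NeedsReview = ($task.Status -ne 'Finished') -or [bool]$task.TerminatingError
            TestResults = $task.TestResults
        }
    }
}

# Usage:
#   Invoke-XdHealthCheck -TestId Configuration_DatabaseCanBeReached -TestSuiteId Infrastructure |
#       Where-Object NeedsReview
```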

I plan on using these cmdlets to some extent in SiteDiag, and expect to get good use out of this new service in the field. I’d be interested to hear from anyone else who has started using this snap-in, and whether they’ve come up with any useful scripts.